Check out the latest javascript, tech and web development links from the daily linkblog.

-

The blog has moved

blogging writing

I have had to migrate my main homepage, and as part of the move I decided to consolidate the blog, linkblog and podcast sites into the new website.

There is also a latest page where you can see the newest content from all 3.

-

Alternative Nations

blogging writing

I got inspired while listening to this excellent mix. Maybe you can guess where exactly; listen as you read.

Where do you come from?

Hmmm, well I come from maaaany places…

I come from jazz

I come from rock

I come from folk

I come from pop

I come from heavy metal

I come from grunge

I come from punk rock

I come from ska

I come from britpop

I come from hip hop

I come from gangsta rap

I come from breakbeat

I come from drum and bass

I come from house

I come from ambient

I come from disco

I come from rave

I come from acid

I come from reggae

I come from dub

I come from dancehall

I come from bhangra

I come from live music

I come from radio

I come from music festivals

I come from carnivals

I come from parties

I come from after parties

I come from after after after parties

I come from Ibiza

I come from noise

I come from experimental

I come from podcasting

I come from pubs

I come from riverboats

I come from front of house

I come from kitchens

I come from chefs

I come from chalkboards

I come from daily menus

I come from split shifts

I come from sledging

I come from skiing

I come from snowboarding

I come from tennis

I come from camping

I come from basketball

I come from baseball

I come from javascript

I come from css

I come from html

I come from sql

I come from bash

I come from perl

I come from python

I come from java

I come from c++

I come from linux

I come from unix

I come from irix

I come from windows

I come from engineering

I come from science

I come from feature film visual effects

I come from software startups

I come from software development

I come from web2.0

I come from APIs

I come from user generated content

I come from BitTorrent

I come from copyleft licensing

I come from gnu

I come from free software

I come from open source

I come from blogging

I come from photo journaling

I come from videoblogging

I come from hacking

I come from making

I come from traveling

I come from road trips

I come from digital nomadism

I come from Europe

I come from the Middle East

I come from the USA

I come from Canada

I come from South East Asia

I come from bad relationships

I come from sobriety

I come from COVID

I come from loving life

I come from racism

I come from abuse

I come from exclusion

I come from blocking

I come from surveillance

I come from police (fake?) brutality

I come from security guard / tuk tuk / taxi / delivery bike mafia intimidation

I come from stalkers

I come from capture gangs

I come from exploitation

I come from conspiracies of silence

I come from an infinite list of contradictions

I come from years of continuous and co-ordinated daily harassment

I come from starvation

I come from thirst

I come from torture

I come from crucifixion

I come from homelessness

-

The occasional listening issues of my head

blogging writing

I previously wrote about reading and memory issues I sometimes have. Around the same time I wrote that, I also wrote another piece about audio listening issues, but I never published it. This week I’m looking for blogging topics, and maybe it will help someone out there, so here it is.

Similar to my occasional reading issues, I’ve noticed that I also at times have issues listening to audio. It takes a very similar shape to the reading issues, in that it feels like some sort of buffering is happening.

I can hear all the words and I’ll be listening attentively. Then I notice that I’ve sort of blanked out for some amount of time, and I can’t remember what I’ve just been listening to. I then have to rewind to a place I recognise. Sometimes that’s quite a long way back, occasionally a minute or two, but usually several seconds.

It also happens when my mind is being triggered by the audio I’m listening to. I start to formulate lots and lots of questions about what I’m listening to, and in doing so I lose my place in the ongoing audio. Mood also has an effect.

At times it’s annoyingly small side thoughts that totally derail my listening. I’m left trying to remember what I was listening to, and I simply can’t, all the while conscious that the not remembering is causing me to miss yet more, because the audio I can hear is basically going straight into the void. And so it gets even worse.

At times it gets so bad that the only way I can absorb anything at all is to write copious amounts of notes. I have to continuously pause, rewind and write notes so I understand what’s going on, but I lose the flow of the overall audio. I suspect that it sort of spoils my long-term memory of the piece, because a lot of the subtleties and nuance get clobbered.

Taking a break helps a bit, as does having food and something to drink. In case it wasn’t already abundantly obvious, starvation and thirst do not help one bit, something I have been unfortunate enough to verify.

-

The HTML5 Phone

blogging writing

I’ve been having this recurring thought. I don’t know how feasible it is, but I wanted to write about it just in case. Wouldn’t it be awesome if there was an HTML5 phone?

The HTML5 phone would have all its UI built from HTML, CSS & Javascript, and would likely run on a minimal Linux distro. Native apps would run in a custom NodeJS runtime, perhaps using something like Electron. It would be great for web developers, with the possibility to develop for the phone, on the phone. It could also leverage the web platform via PWAs. There’s a lot of variation possible, but the focus would be on HTML5 everywhere.

I think there are a lot of current trends that could help turn this crazy pipe dream into a reality that gains traction:

- Recent big push towards open web standards

- A move towards privacy, with privacy-focused phones and phone OSs like Purism and /e/OS

- More alternative custom javascript runtimes

- Phone hardware is fast

- There are a lot of web developers out in the world at this stage

Wouldn’t a progressively enhanced OS be cool? You’d always know that the basic apps you need will work, no matter what phone you have.

It might not even be that difficult to start. Initially, just create a bare-minimum suite of apps, progressive enhancement style, that do the very basics of the following:

- Browser

- Camera

- Music

- Video

- Notes

- File Explorer

- Contacts

- Messaging

- Calling

Build them as super simple web apps first. Once there’s something functional, enhance as needed, port to native apps etc.

The most difficult app is probably the web browser. I had initially thought that you could just have a browser and do everything with PWAs, but I think it’s sensible to have native apps to make sure you’re not forced to wait for web platform features.

Anyhow, here are some interesting posts I found about creating browsers, just to get a sense of what’s involved:

- How to get started building a web browser?

- How to build a web browser – Part 1: Specifications

- Building a Web Browser Using Electron

Yeah, I know it’s a sort of crazy, half-baked idea, but I just keep thinking how awesome it would be, even if it was quite rough around the edges initially.

As a web developer, I’d like to have more control around my phone / mobile / tablet experience, so why not just do it all in HTML5?

-

OS Progressive Enhancement

blogging writing

I’m trying to get back into the blogging flow after a period of not writing much, so the next few posts are likely going to be a bit light on well-thought-outness, which isn’t even a word, but whatever. The enemy for me right now is getting bogged down, just trying to keep moving…

I really like the web development practice of creating websites using progressive enhancement. Essentially, you first create a bare-minimum app using mostly HTML and platform features, and make sure that works, even if it looks a bit weird. The key thing is that, functionality-wise, it’s operational.

Then you enhance that with styling using CSS, and improve the functionality using javascript. This ensures that in pretty much any situation, even if for some reason the CSS and Javascript fail to load or get blocked, you still have an operational website.

I’d like to have something similar with operating systems. No matter which OS I have to use, be it desktop, laptop or mobile, I’d like to know that the default apps provide a minimum of functionality so I can at least do basic things.

Major apps like contacts, notes, browser, etc. could have minimum functionality specs, so you could quickly see which OSs you could move to and still be functional.
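To make the idea a bit more concrete, here is a rough sketch of what a minimum functionality spec might look like as plain data, with a check against what a given OS offers. Everything in it is hypothetical: the app names, the feature names, `minimumSpec`, `missingFeatures` and `meetsMinimum` are made up for illustration, and no existing standard is behind them.

```typescript
// Hypothetical minimum functionality specs for a few core apps.
// App and feature names are invented for illustration only.
type CoreApp = "contacts" | "notes" | "browser";

const minimumSpec: Record<CoreApp, string[]> = {
  contacts: ["add", "edit", "delete", "export"],
  notes: ["create", "edit", "search", "export"],
  browser: ["open url", "back/forward", "bookmarks"],
};

// What a given OS's default apps can do (hypothetical data).
type OsFeatures = Partial<Record<CoreApp, string[]>>;

// For each app, list the spec'd features this OS is missing.
function missingFeatures(os: OsFeatures): Record<CoreApp, string[]> {
  const result = {} as Record<CoreApp, string[]>;
  for (const app of Object.keys(minimumSpec) as CoreApp[]) {
    const available = new Set(os[app] ?? []);
    result[app] = minimumSpec[app].filter((feature) => !available.has(feature));
  }
  return result;
}

// An OS meets the minimum when no app is missing anything.
function meetsMinimum(os: OsFeatures): boolean {
  return Object.values(missingFeatures(os)).every((gaps) => gaps.length === 0);
}

const someOs: OsFeatures = {
  contacts: ["add", "edit", "delete"],
  notes: ["create", "edit", "search", "export"],
  browser: ["open url", "back/forward", "bookmarks", "tabs"],
};

console.log(missingFeatures(someOs));
console.log(meetsMinimum(someOs)); // false
```

Extra features beyond the spec (like "tabs" above) don't count against an OS. That fits the progressive enhancement idea: the spec is only a floor, and anything above it is enhancement.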

Slightly scattered thoughts, but the gist of it is progressive enhancement for operating systems.

-

The Big Tech Sandwich

blogging writing

Sandwich

- Tech

- Culture

- Religion & history

Background

I recently wrote a series of newsletters where I explored the idea of a Big Tech Sandwich: a mental model for thinking about tech in the broadest context, and for handling the mess that is society. Hopefully it’s helpful in moving things forward so that we can make the world a slightly better place for the next generation. I personally have found it useful.

This blog post is a sort of summary, to have something I can point to in the future. I’ve been going through a moderately severe case of writer’s block the past few weeks, combined with very patchy internet access, which isn’t a great combination. Consequently, this post is unlikely to be a super smooth read, but I think the ideas are interesting. Consider this a v1; I’ll update it later. Better to have something published than nothing at all.

These were the newsletters:

- Mid-week special: Navigating the big tech mess (Wednesday 27th April, 2022)

- The full full stack, and the weight of history (Saturday 30th April, 2022)

- The Big Sandwich (Saturday 7th May, 2022)

- Culture Smash (Saturday 14th May, 2022)

- Demons be gone (Saturday 21st May, 2022)

- Mid-week Special: Dilemma Addendum (Tuesday 24th May, 2022)

A quick note about culture, because it means different things to different people. When I’m speaking about culture here, I’m mostly thinking about the culture of society rather than something like company culture. They are similar and related, but I’m thinking more about music, art, movies and books in general, with large movements that develop mostly organically.

Scale

Where is tech in the bigger picture? Those of us who spend most of our time in the tech industry have a tendency to overplay how important tech is in society. My observation is that, yes, things are complicated, and yes, we’ve made a lot of progress, but things are a mess, much messier than is immediately obvious. When you start looking around, it’s a bit unnerving to realise. Things are a mess.

But wait, it gets worse, technology is really just a small part of our overall culture. We have some effect on it, but culture is huge, and guess what? It’s also a mess, a really big mess actually.

As you zoom out some more, the timescales increase dramatically. You soon get to the scale of history and religion. You realise how small tech is in the overall picture. Oh yeah, and by the way, you thought tech and culture were a mess? Well, let me tell you, things get mind-bogglingly messy at this level. It’s literally unbelievable that we are all living side by side on this small rock we call Earth.

Society, culture, history and even religion play a much much bigger role than we like to admit to ourselves. This sort of stuff is absorbed automatically as we grow up, we don’t even notice a lot of it.

Culture is important, but very difficult to get right. It can help us build temporary scaffolding around difficult areas of our shared history. It gives us the ability to move forward in the present while being informed by the past, but without getting too bogged down. It’s by no means perfect, there are a lot of bumps in the road in some places.

I wrote quite a bit about the example of how popular culture helped to integrate very different parts of UK society. That issue:

- Culture Smash (Saturday 14th May, 2022)

I also wrote a related but different piece a bit earlier which is relevant:

- Punk Rock Internet (Saturday 19th March, 2022)

The future

Things are actually getting better. When you stand back and see the bigger picture over thousands of years, it’s apparent that things are getting better. The large empires of the past that ruled in very violent ways are becoming much more modern, slavery has been abolished, we collaborate across borders, in different languages, build things together, and we do it using tech.

It’s difficult though because we have all these tough histories that we’ve all been through. There are some very scary things that happened, and for many it’s still horrendous. Some people have a lot, others don’t have much.

So that’s my first attempt at a summary; it glosses over a lot. To get a better sense you really need to read some of the newsletters, and listen to some of the podcasts linked in those issues. I encourage you to do that, it’s fascinating stuff. We can get culture moving and popping again, and in even more diverse ways. It might even be fun.

It’s a mess, but slowly, one step at a time, we can together make it better for the next generation and ourselves.

-

Buddhism vs Christianity - WTF World?

blogging writing

Fat Buddha: Abundance!

Jesus nailed to cross: Everything is my fault!

WTF world? Seriously.

-

The good homeless person’s (and society’s) dilemma - Addendum

blogging writing

An additional thought to the post from a few days ago.

The non-homeless man is possibly part of a Capture Gang - See section about audience capture for details.

-

The good homeless person’s (and society’s) dilemma

blogging writing

A homeless person has a series of daily run-ins with a non-homeless person, where the non-homeless person is unknowingly doing something that puts the homeless person in some sort of danger. The danger could lead to a bad thing happening that has the potential to eventually cause the homeless person’s death.

The homeless person tries each day to tell the non-homeless person that he’s being dangerous but the non-homeless person simply does not see the danger.

After a few days the non-homeless person realises what it was that he was doing that was dangerous and stops doing the dangerous thing.

Meanwhile everybody else has stopped giving the ‘crazy’ homeless person money, because they don’t want to incentivise his ‘crazy’ behaviour, so he’s been starving and thirsty for several days.

The homeless person essentially had to pay in advance to fix somebody else’s problem.

Eventually, if this sort of thing keeps happening, the homeless person doesn’t have enough flesh on his body and so he dies.

Also, the non-homeless person might be lying.

-

Npm installing multiple private Github repos using ssh aliases

blogging programming

When you are developing in NodeJS it’s often useful to be able to install private modules.

The main ways to do that are:

1. Github Packages using Personal Access Token (PAT)

2. Private Github repo using Personal Access Token (PAT)

3. Private Github repo using Deploy Keys

Apparently (1) is the most popular among developers; however, it requires setting up and managing additional workflows to create the Github Package.

(2) Only works for a maximum of 1 private repo.

(3) Works for 1 private repo, and can be extended using ssh aliases to work with multiple private repos.

Deploy keys are also more granular in terms of security than PATs.

This article focuses on method (3).

Github has an example repo that demonstrates how to install a private repo using deploy keys. It’s worth testing that out.

If you try the same methodology for installing more than one private repo in the same workflow, you’ll run into an issue. This is because Github doesn’t allow you to re-use the same deploy key across repos, and by default the ssh connection npm makes (via git) will use the default ssh private key (~/.ssh/id_rsa), of which there is only one.

However if you configure ssh to use aliases, you can specify a different private key for each alias, and then use those aliases in your package.json to specify the private module dependency.

Read more details about how to do that in the Github docs.

For example if you had 3 private repos:

- username/repo1

- username/repo2

- username/repo3

If repo1 installs both repo2 and repo3, add an ssh config ($HOME/.ssh/config) as follows:

```
Host github.com-repo2
  Hostname github.com
  IdentityFile=/home/user/.ssh/private_key_repo2

Host github.com-repo3
  Hostname github.com
  IdentityFile=/home/user/.ssh/private_key_repo3
```

Then specify the modules like so in repo1’s package.json:

```
"dependencies": {
  …
  "repo2": "git+ssh://git@github.com-repo2:username/repo2",
  "repo3": "git+ssh://git@github.com-repo3:username/repo3",
  …
}
```

It’s important to specify the protocol correctly. Without ‘git+ssh://’ the install completes, but none of the files actually get installed; instead, some type of symlink gets created. At least that’s what happened in my tests.
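With more than a couple of private repos, writing the aliases by hand gets repetitive. Here is a small TypeScript sketch that generates both the ssh config entries and the matching package.json dependency strings from a list of repos. The `PrivateRepo` type, the `sshConfigFor` and `dependenciesFor` helpers, and the `private_key_<repo>` file naming are assumptions that simply mirror the example above. Adjust them to your own setup.

```typescript
// Hypothetical description of a private dependency repo.
interface PrivateRepo {
  owner: string; // GitHub user or org, e.g. "username"
  name: string; // repo (and package) name, e.g. "repo2"
}

// One ssh Host alias per repo: same real host, different deploy key.
function sshConfigFor(repos: PrivateRepo[], keyDir: string): string {
  const blocks = repos.map((r) =>
    [
      `Host github.com-${r.name}`,
      `  Hostname github.com`,
      `  IdentityFile=${keyDir}/private_key_${r.name}`,
    ].join("\n")
  );
  return blocks.join("\n\n") + "\n";
}

// package.json dependency specs that route each install through its alias.
function dependenciesFor(repos: PrivateRepo[]): Record<string, string> {
  const deps: Record<string, string> = {};
  for (const r of repos) {
    deps[r.name] = `git+ssh://git@github.com-${r.name}:${r.owner}/${r.name}`;
  }
  return deps;
}

const repos: PrivateRepo[] = [
  { owner: "username", name: "repo2" },
  { owner: "username", name: "repo3" },
];

// Append the first output to ~/.ssh/config; merge the second into package.json.
console.log(sshConfigFor(repos, "/home/user/.ssh"));
console.log(JSON.stringify({ dependencies: dependenciesFor(repos) }, null, 2));
```

The key files themselves still need to exist at those paths before `npm install` runs.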

Store your private keys in Github secrets, and before your install step, create the private keys on the filesystem from those secrets:

```
/home/user/.ssh/private_key_repo2
```

and

```
/home/user/.ssh/private_key_repo3
```

Now in your repo1 workflow you should be able to install all repo1 dependencies using:

```
npm install
```

It’s worth having a verify step after install that lists the installed files using ls -l and/or tree.
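The failure described above leaves a symlink instead of real files, so a verify step that looks for exactly that can be handy. Below is a minimal TypeScript sketch. It assumes Node's built-in `fs` and `path` modules and that dependencies end up in `node_modules/<name>`. The `checkInstalled` function and its messages are hypothetical, not part of npm.

```typescript
import * as fs from "fs";
import * as path from "path";

// lstat without following symlinks; null if nothing exists at that path.
function lstatOrNull(p: string): fs.Stats | null {
  try {
    return fs.lstatSync(p);
  } catch {
    return null;
  }
}

// Hypothetical post-install check. Each dependency should be a real directory
// at node_modules/<name> with at least one file besides package.json.
// Returns a list of problems; an empty list means everything looks installed.
function checkInstalled(projectDir: string, deps: string[]): string[] {
  const problems: string[] = [];
  for (const dep of deps) {
    const dir = path.join(projectDir, "node_modules", dep);
    const stat = lstatOrNull(dir);
    if (stat === null) {
      problems.push(`${dep}: not installed`);
    } else if (stat.isSymbolicLink()) {
      problems.push(`${dep}: installed as a symlink, not as files`);
    } else if (!stat.isDirectory()) {
      problems.push(`${dep}: not a directory`);
    } else {
      const files = fs.readdirSync(dir).filter((f) => f !== "package.json");
      if (files.length === 0) {
        problems.push(`${dep}: no files besides package.json`);
      }
    }
  }
  return problems;
}
```

In a workflow step you could call something like `checkInstalled(process.cwd(), ["repo2", "repo3"])` and fail the job if the returned list isn't empty.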

-

Infected leg wounds

blogging health

I’m still battling with infected leg wounds, but things are a bit better than earlier in the week. I can at least sort of walk now.

What happened? I don’t fully know.

A month ago, a stranger nonchalantly walked up to me in a park, touched my right knee, smiled and walked off. Very weird.

A couple of days later that exact spot started feeling sore. I didn’t think much of it, but I ran out of disinfectant, and it rapidly turned into an abscess.

I got bandages and disinfectant, but then ran out again. I had to use moving tape to secure my last 2 bandages. I was able to get to a pharmacy but the moving tape created more sores, and those turned into more abscesses.

Somehow the abscesses jumped across to my other knee. The whole knee ballooned with one absolutely giant abscess. Over the past few days that has turned into a strip of abscesses that looks oddly like an upside down exclamation point. What are the chances?

Earlier in the week I was worried it might permanently affect my mobility; I could barely walk. I’m now worried about scarring. An improvement, but not much better.

Dressing and cleaning these sores has brought some of the most intense pain I’ve ever experienced. I’ve had to spend literally hours at a time squeezing out pus, with the same intensity and force as an arm wrestle.

Something similar happened to me in India a few years ago, I’ve got a small scar from that experience. Looks like I’ll have a bigger scar this time.

There have been a lot of unpleasant reactions from people, from mild racism to people finding it hilarious to see a foreigner in pain.

I know these are a small group overall, but as with trolls in online comments, they can make it seem like the whole world is against you. I’m both mentally and physically bruised at the minute.

You might be wondering: Are you ok?

My response: No, not really.

-

My brain's favorite pastime

blogging health

My brain: Do this now or I will delete this…

Me: Yes that does sound interesting, I’ll just finish what I was doing first, it will only take a few seconds

My brain: Too late! This has been deleted, haha, that’ll learn you

Me: Shit, what was I doing again? :(

I think perhaps it happens more often when “the external world” is constantly butting in, trying to prompt me to do things that I am already about to do. Eventually it devolves into some sort of race condition, and the only thing you can do is walk and move really, really slowly, like a grandad.

Makes you wonder about alternative scenarios for where grandads actually come from, which doesn’t help the situation much either.

-

What I love about loud guitars

blogging music philosophy health

I’ve been listening to loud guitars for about 40 years. That’s quite a long time.

I took a bit of a break from listening to music recently. It lasted about two years. It wasn’t planned; life circumstances arranged themselves in such a way that listening was more difficult than it had been previously, I was very busy with other things, and I just sort of stopped.

I was still listening to podcasts, but not music. At some point I listened to a podcast that reminded me about my love for music. It was a strange feeling, like oh-yeah-I-used-to-really-love-music. In that moment I realised that I had basically forgotten. It was the strangest thing, similar to remembering a dream.

I’ve since started listening to all sorts of music again, electronic and band / guitar based. Not loads, but some. I’m so glad that I remembered.

I was listening to some great punk/hardcore/indie/metal recently and was moved to try to put into words the way it makes me feel. The following are extracts of what I wrote.

That thing where you feel like you’ve just been plugged into the mains, and all you want to do is shout YES over and over and over again, really fucking loudly.

It’s like you’ve just been injected with the adrenaline of 1000 horses.

And then the bit finishes and you’re like… “God damn”.

It’s odd because the feeling doesn’t happen in your physical body, you can listen to a whole song and not have any physical change, but the feeling is like it’s in some sort of virtual body, that’s somewhere there in the background, that’s momentarily become very very alive.

It also feels like you are inside the sound, which is odd because it’s the sound that’s inside you, but when you hear that guitar none of that matters anymore, whether it’s you or the sound or whatever, because you’ve just been plugged in, that part of you is alive again.

It’s all the music you’ve ever listened to, all the music videos you’ve ever watched, all the live shows you’ve been to, all the late nights, and all the people you’ve shared those experiences with, in that moment of guitar electricity, they are all there alive again.

It’s such a strong feeling that it’s really bizarre to me that some people can listen to the very same sounds and feel nothing. It just sounds like noise or something.

Those were the bits I wrote. I also love listening to quieter music, but in that moment, it was all about the loud guitars.

I don’t drink alcohol anymore; it’s been about 3 or 4 years now. I’ve noticed that the music is even better: the drum sounds are crisper, the guitars are more alive, and with electronic music everything is much more heightened. I notice so much more, it’s like the sound is now in HD when before it was SD. I didn’t expect that at all. It’s awesome.

-

What can web developers learn from the industrialisation of farming?

blogging economics programming

Stuff You Should Know published an interesting episode all about the chicken farming industry. It’s a really great piece, I encourage you to go and listen to it.

The truth between Cage-free and Free-range (Stuff You Should Know Podcast)

I was appalled to learn how horrendous the conditions are for those animals. It’s shocking to learn about some of the practices we developed while industrialising farming, practices that are now normal in our societies. It’s good that people are reconsidering some of these norms.

It also got me thinking about our future with the forward march of technological innovation. We are building ever more complex technologies. Here’s the thought process I can’t seem to shake:

-

Anyone can write software

-

We can build virtual environments

-

There exist people that have industrialised extremely cruel animal farming

-

Those people will use similar techniques on other people, it’s going to happen, it’s just a matter of time

It might not be as visually obvious as chicken cages, in fact it’s likely that it would be designed to fit in our societies and appear normal.

How would we detect instances of people being raised using battery farming techniques?

How would we get out of such a situation if we were already in such a situation?

What about related concepts like bullying? How will that be in the future?

Very relevant and really worth listening to, especially if you are into psychology:

The Dangers of Concept Creep (The Art of Manliness Podcast)

I think it’s worth trying to imagine this sort of thing because ultimately the thing we are farming with the world wide web and the metaverse is ourselves.

Let’s imagine a better future for everyone.

-

-

Reading and memory issues I sometimes have

blogging

Difficult to talk/write about this.

Firstly because it’s not something I’m particularly happy to admit, but also because I’ve only recently become aware of it, or at least aware enough to be able to describe it. It’s something I’ve been aware of in some way for years, and it might be getting worse, I can’t tell. So what is it?

Sometimes when I read, I lose the ability to read consistently and with ease. It doesn’t always happen. It seems to happen more when I’ve had a turbulent day, something that’s been happening a lot lately. I get into a state where instead of words coming off the page, so to speak, going through my eyes and being perceived by my brain, in a mostly consistent flow, it feels like there is some sort of buffering going on.

Instead of the words just streaming into my thoughts and brain, my brain seems to switch into a mode that operates a bit like that quiz show where the contestants answer questions and have to bank the money at the right time or they lose it. I forget the name of that show; it’s the one with that dreadfully scary red-headed woman.

Anyway, here’s what happens. I read several parts of the sentence, and at some point a whole chunk makes it through to my consciousness all in one go, and then on to the next bit, which gets slurped in the same way. I have to speed up and slow down in just the right way, or bits start to get missed.

I become aware that it’s happening and I kind of feel when the next chunk is going to be ‘let in’, and that screws up the rhythm, so some chunks get skipped or only partially comprehended, and I find that I have to constantly go back and re-read passages to fill in the gaps.

Perhaps it’s got something to do with being tired, but I’m thinking it might be something else. When I’m feeling uncluttered and fresh, the buffering thing doesn’t appear to happen, and reading is as smooth as riding a hoverboard. When it’s happening, it feels like someone is turning a tap on and off.

Something that I’ve noticed that is very different the past several months is that I see a lot of people swinging their arms. This sounds really weird, but at some stage a few months ago basically everyone everywhere started a new exercise routine, where they swing their arms backwards and forwards. Some people do it standing still, others do it while they walk. Sometimes they are doing it while walking backwards.

It’s the new trend where I am. I see literally hundreds of people doing this every single day. That wasn’t always the case. I’m not sure exactly when it started happening. Anyway it’s something that people do, a lot, fair enough, but I wonder if it’s affecting my ability to read smoothly because I’m visually seeing this repetitive up down motion over and over again. It might be completely unrelated, but I think it’s worth mentioning.

I wonder if it’s happening to others without them realising. It’s quite subtle, easy to brush off, especially if you operate day to day with things like caffeine and alcohol. I don’t consume alcohol (it’s been 3-4 years now), and caffeine relatively rarely.

I’ve also noticed my memory at certain times isn’t as good as I expect. I’m trying to remember something, I almost remember it, I can just about feel the thought popping into my head, and then it’s gone. Sometimes this happens several times before the memory finally makes its way through to my conscious mind.

Occasionally I have to get on with something else, to distract myself from myself, and the memory comes back randomly like a callback function that just got delayed by a very slow internet connection, and a temperamental firewall.

Depending on where you are, you might find people around you that know about this will try to ‘hack’ you. That’s something that definitely happens. It’s a good opportunity to practice keeping calm under pressure, or just to surrender and move on to another place.

That’s my best description of the reading and memory issues I sometimes have. Just putting it out there in case it helps someone else that might be having similar problems.

I haven’t found many ways to improve it apart from just not doing anything for a few hours. I guess meditation can be good too. I do that occasionally. These are not always possible options, sometimes you just have to soldier through to get what you are doing done.

-

Typescript makes function declarations difficult to read

programming javascript

I’m not a massive user of Typescript, though I see it could be very useful in some situations.

The main issue is that I think the additional cognitive load isn’t trivial, and so code that you could easily skim through to get a good idea of what’s going on becomes full of little hurdles. Perhaps it’s something you get used to, but I fear it would seriously impact my ability to read and understand code. It decreases visibility.

Having said that, I really like that you can specify the definitions in JSDoc. That’s cool because the code remains regular, fast to read javascript code. If you are wondering about types, just look at the JSDoc, which is always right next to the function declarations anyway.

Though you don’t get the full power of Typescript using it that way, only the type checking. I’d also like to be able to use interfaces and other object oriented features that come with Typescript.

Which brings me to the point of this post. If you are using full fat Typescript, IMO the way to define the types in the function arguments sucks. Reason being that it uses the colon (:) character.

```typescript
function equals(x: number, y: number): boolean {
  return x === y;
}
```

I don’t like that because the colon character is for object declarations. Following years of writing javascript objects, my brain’s muscle memory visually associates that character with objects, so when I’m scanning a page I can easily jump to places on the page without having to fully read the code. Using : in function argument type definitions breaks that for me. I find it’s much more difficult to quickly get my bearings in Typescript code.

I’m also not a fan of long function argument lists and Typescript doubles or triples the length of function argument lists. I feel that function declarations need to be short and to the point. It makes reading code much easier. I don’t find that having the return type is very useful in the function definition. It just feels like clutter to me.
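For what it’s worth, full TypeScript already lets you move the signature out of the declaration and into a separate function type alias. The parameters are then typed contextually, so the argument list stays short and there is no return type in the declaration. A small sketch follows. The `Equals`, `User` and `IsAdult` names are just for illustration.

```typescript
// The signature lives in a separate type alias, not in the argument list.
type Equals = (x: number, y: number) => boolean;

// x and y are inferred as numbers from Equals (contextual typing),
// and the boolean return type is checked against the alias.
const equals: Equals = (x, y) => x === y;

// The same pattern with an interface describing a richer argument.
interface User {
  name: string;
  age: number;
}

type IsAdult = (user: User, minAge: number) => boolean;

const isAdult: IsAdult = (user, minAge) => user.age >= minAge;

console.log(equals(2, 2)); // true
console.log(isAdult({ name: "Ana", age: 30 }, 18)); // true
```

Whether that actually reads better is a matter of taste. The colon doesn’t disappear; it moves from the argument list to the variable annotation.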

Some people say they like to know the types before they get into the function. Personally I find that once you start reading the code inside a function, it’s usually very obvious to differentiate between objects, arrays, strings, numbers etc. If I want to know the type, it’s preferable to glance at the JSDoc for the function.

There’s a proposal to bring some Typescript syntax to javascript. Personally I’d like it if the type definitions were kept separate, keep function declarations readable! But I would like javascript to have interfaces and additional OO features.

-

Creative Economy vs the Cruelty Economy

philosophy blogging economics

Some thoughts on these two concepts, don’t take these as fact, just some observations, might lead to something interesting, or maybe not.

In the cruelty economy you are rewarded for receiving and disposing of cruelty. People optimise for not being hurt, not getting angry, for catching the perpetrators of cruelty. Things tend towards imprisonment.

In the creator economy you are rewarded for receiving creativity and releasing it. People optimise for maximising the creativity, even bad stuff can be good. Things tend towards anarchy.

Things go bad when your capacity to process is exceeded. Creativity turns to cruelty, but perhaps also cruelty turns to creativity.

Real true anger is a capacity problem, it’s the group mis-managing capacity.

It's a sort of symbiosis, perhaps similar in concept and behaviour to other natural phenomena like the El Niño and La Niña weather cycle. In the case of the creator-cruelty economy, ultimately it's all based on chemical fluctuations in our brains.

It’s a really tough problem to think about, because we are all in the map so to speak, whatever actions we take, including thinking about the dynamic, affects the dynamic, and the balance.

A potential danger is that you get caught in one extreme or the other, without enough capacity to switch. And then you are probably in for a bad time. Smashed against the rocks, over and over again, in plain sight.

-

The cruelty economy

web development programming

The Creator Economy has been pretty great.

So many things to watch and listen to. But that’s nothing compared to what’s coming down the pipe: The Cruelty Economy!

Creative Economy + Web3

Crypto, blockchains, memecoins, DAOs, cosplay, algorithms, Anon, AI, brain-computer-interfaces, WOW.

War and peace…AT THE SAME TIME!

Some of you are gonna love it! At least some of the time.

What could possibly go wrong?

With our patented starvation&thirst algorithm, nothing!

-

Cool things that were in web2.0

web development programming social media

Last week I listened to a John Gruber interview with Tom Watson and Daniel Agee, the founders of the photo sharing app Glass. They talked a lot about what it was like during web2.0, including some of the cool things that got developed in that period.

I enjoyed the brief trip down memory lane, an oasis of calm amongst the current madness all around. I spent a few minutes making a list of some of the things I remember, and enjoyed wondering how some of these types of technologies and trends could blend with things in web3.0.

Here’s that list:

- Rocketboom, Ze Frank, and videoblogging

- Citizen journalism

- Creative Commons licensing

- Crowdfunding

- Community events and meet-ups

- The Maker movement

- 3D printing

- Photo sharing

- Video streaming

- Online maps and navigation

- Blogging and RSS

- Live photo feeds from events with photos displaying on your desktop as they are taken

- Journalism integrated with data analysis tools

- Social media

- Newsrooms integrating social media into their broadcasts

- Wikipedia

- APIs everywhere

- Web services - AWS, Azure etc

- Podcasting and podcast tools

- Open source hardware

- Open source software

- Github repos and actions

- BitTorrent for sharing assets with listeners to collaborate on

- User Generated Content

- Live video streaming mixed with forum/chat rooms with bots that trigger video and audio overlays when events like tipping occur

- Gifs

- Memes

- Newsletters

- Weird one-offs that never took off, like Chatroulette

Some of these were around before web2.0, but got very popular during web2.0 and arguably some were developed more recently but IMO feel very web2ish. Nonetheless when I think about web2.0, these are some of the things that come to mind.

-

My unsophisticated view on whether we have free will

philosophy blogging

People are always talking about this in podcasts and around the internet, and I suppose it’s all quite interesting, but I just wanted to have some clear thoughts about it myself. So I spent a few minutes thinking about it and this is what I came up with.

It’s probably completely wrong. I wouldn’t take this as life advice.

Do we have free will?

Yes. Because…

-

You have a sort of biological choice algorithm in your head, implemented by neurons organised in neural networks. It develops in your brain over time, and at any one time that choice algorithm is used to make choices that act on the inputs your brain is receiving. The choice is independent from the inputs, rather than being somehow packaged up and smuggled into your brain along with them.

-

We have the ability to introspect, so in principle we can observe instances of unfree coercion and change our thought processes accordingly. YMMV: every person's situation is very different, and some have a lot more external pressures than others, which isn't always bad, but it definitely can be.

-

If you remove everything, so you are an astronaut floating in the emptiness of space, you can still make choices.

Therefore humans have free will. In this view, free will is very similar to independence of thought.

Feels kind of good to have that written down, at least I’ve got something I can point to now.

-

Offline

web development offline

Yesterday I wrote about the idea of offline pull requests. It wasn't a fully formed idea, more of an observation that the current git tooling could improve the offline experience. In the modern, always on, always connected world, the idea of offline might sound a bit strange or outdated. It might also seem a bit weird for a web developer to be interested in offline, because developing for the web is all about being connected.

I like the idea of being able to move relatively seamlessly between online and offline. I’ve found though, that the offline experience is often lacking.

For example, Safari has a feature called Reading List. It's cool and I've been using it a lot recently. With a couple of clicks you can add a page to your reading list. It's a bit like a bookmark, but in addition to saving the URL, it can save the entire page, so you can read it later offline. When it works, it's amazing.

I've found that my workflow has changed significantly. Instead of endlessly infinite scrolling, I can spend 10-15 minutes in the morning skimming through stuff in my feeds, adding what looks interesting to the reading list, then disconnecting. At a later point in the day, or maybe the next day, when I have some time, I can read through the articles, make notes, have ideas, write blog posts etc. Awesome right, so what's the problem?

There are a few. First and foremost it doesn’t work on all websites. I have no idea why that is, I guess websites have to be written in a special way for it to work. I haven’t had time to research this yet, I’d like to know why it only works for some websites. Another issue is that there is no indication that the site isn’t “offlineable”. You click “Save Offline” and it looks like it saved. Later you try to open the site while offline and you just get an error message, I forget the actual wording, but it feels a lot like “You can’t read that because you are offline, idiot”. You used the feature as advertised, and you still got a slap in the face. You keep calm, and carry on with your life. It happens a lot.

I found an option, buried deep in the app settings, that automatically saves each item you add for offline reading. Nice feature, it definitely makes things better, but you still get slapped in the face with the error messages a lot, because loads of sites just aren't "offlineable".

I wonder if website owners just don't like offline because it blocks the click info they get when you are online; maybe it somehow affects their ad revenue. With better tooling that's an issue that could be solved.

It would be great if in addition to the saved page, each linked item in the saved page was also saved for offline reading. And wouldn’t it be cool if there was a way to click a button to get the current page, as well as a selection of other popular articles on that site.

When Matt Mullenweg went to Antarctica, he prepared for the trip by downloading portions of Wikipedia. Cool idea. I wonder how he did that; I guess he's got a special Wikipedia reader tool. I'd like to be able to do that sort of thing in more places. For example, when browsing a Github repo, save the site, but also save the associated documentation site. I wonder if Progressive Web Apps (PWAs) might in some way be a solution here.
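On the PWA question, the usual building block is a service worker that answers requests from a cache when the network isn't there. Here's a minimal cache-first sketch. To keep it testable outside a browser, the `cache` and `fetchFn` are passed in; in a real service worker they'd be a cache from `caches.open(...)` and the global `fetch`, wired up in a `fetch` event handler as in the comment. The function name and the `offline-v1` cache name are placeholders of my own, not part of any particular site or tool.

```javascript
/**
 * Cache-first strategy: serve a stored response if there is one,
 * otherwise go to the network and keep a copy for next time.
 * @param {Request|string} request
 * @param {{match: Function, put: Function}} cache  e.g. await caches.open("offline-v1")
 * @param {Function} fetchFn  e.g. the global fetch
 */
async function cacheFirst(request, cache, fetchFn) {
  const cached = await cache.match(request);
  if (cached) return cached;

  const response = await fetchFn(request);
  // Only store successful responses; clone because a response body can only be read once.
  if (response && response.ok) {
    await cache.put(request, response.clone());
  }
  return response;
}

// In a service worker file this could be wired up roughly like:
// self.addEventListener("fetch", (event) => {
//   event.respondWith(
//     caches.open("offline-v1").then((cache) => cacheFirst(event.request, cache, fetch))
//   );
// });
```

A real version would also skip non-GET requests (the Cache API rejects storing them) and decide what to show when both the cache and the network come up empty.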

Reading Lists are useful but they don't work with video. I miss watching Youtube videos. With an offline workflow, there's no way at the minute to watch video. In the early days of podcasting, people often put videos in their feeds; that was what videoblogging was before Youtube. I'm not saying that's better, I still like watching on Youtube, but I'd love it if there was a "Watching List" similar to how Reading Lists work.

Online experiences are great, but I like offline too. Podcasting has shown us that offline is worth considering, that it's beneficial to our lives. Let's imagine a future where offline and online are complementary.

-

Offline Pull Requests

programming workflows

Aside from git being a phenomenal version control tool, its ability to work offline is one of its best features. This is especially true if you move around a lot, but even if you don't, sometimes you just need to disconnect from the network, avoid distractions from things like social media and email, and do some heads down focussed development.

Once you’ve made some progress, it’s trivial to sync back up with the repository remotes. Pull in the changes since you were last online, merge with your code, resolve any conflicts, and push your changes back up to the remote. This is possible because when you clone a repository, you have an entire copy of the repository on your local machine.

This way of working is standard with the command line git tool. It’s how it was designed to work. Each developer has a complete copy and can work entirely independently. This worked really well for many open source projects, but as git hosting platforms emerged, they added new features. One of the most praised has been the Pull Request (PR). It’s become so central to modern development, that most developer workflows in some way revolve around them.

They are essentially a way to co-ordinate ongoing feature work. They take the form of a web page that has a discussion thread where contributors can talk about the changes they are making to the code. Contributors make many commits to a feature branch on their local machine, push those commits to the remote, and at some stage a PR is created. Discussions then happen, more commits can be added, and when the feature is deemed to be complete, it can be merged into the main branch and the PR is closed. At that point all the commits that were pushed to the feature branch will be in the main branch.

The PR has become more than just a discussion area. Many integrations with 3rd party tools enable running test suites, with results displayed in the PR page alongside discussions. There are other neat features like bots that can scan code and post results to the discussion, code reviews, and analysis of comments to spot mentions of other PRs or, for example, to trigger workflows. The automation features can help speed up development and maintenance of the project. Over time, PRs become a key place where knowledge about how the code was developed is stored. It's quite common to spend some time browsing through closed PRs to get a sense of how things are moving along, or how a particular bug was fixed. The PR has been a very successful feature and has been adopted by most git hosting services.

It’s not to say that PRs don’t have their downsides. Some of these were highlighted in a recent episode of the Changelog podcast(~20:00). They speak about many of the pros and cons. It’s an interesting discussion. There has also been a “PRs are bad” meme making the rounds the past few weeks (but I can’t find it right now), and much has been written about the pain of PRs. We love PRs, but some of us, at times, find them an impediment to progress.

In my personal work I often want to be able to work entirely offline, but I miss the ability to write notes as I develop a feature. There isn’t much point in using PRs since they aren’t accessible offline. PRs are entirely provided by the platform, standard git has no such feature. Making PRs available offline would be an incredible feature, but it probably doesn’t make that much sense, because the threaded discussions would get all out of sync. I’d be happy to be proved wrong on this though.

Offline PRs would make moving between providers feasible. If you have ever tried to do that, you'll know that it's not at all straightforward. Moving the repo is easy; moving the PRs and all the accumulated knowledge within them is not at all easy.

However, with PRs acting more and more as a place to combine the results of many tools, I wonder if there could be some form of independent notes, written offline, that could then be automatically attached to a PR along with the pushed code commits.
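To make the idea a bit more concrete, here's a rough sketch of the kind of glue a tool could provide. Everything in it is hypothetical: notes written offline are stored as small records tied to commit hashes (for example in a file kept alongside the repo), and at push time the notes for the pushed commits are turned into one Markdown comment that could be posted to the PR. Git does have a built-in `git notes` feature for attaching text to commits, which might be a natural place to keep them, but the record format below is just an assumption.

```javascript
/**
 * Hypothetical: turn notes written offline into one Markdown comment for a PR.
 * Each note is { commit: string, date: string (ISO 8601, UTC), text: string }.
 * Only notes whose commit is among the pushed commits are included.
 */
function notesToPrComment(notes, pushedCommits) {
  const pushed = new Set(pushedCommits);
  const relevant = notes
    .filter((note) => pushed.has(note.commit))
    // Same-format UTC ISO 8601 strings sort chronologically as plain strings.
    .sort((a, b) => a.date.localeCompare(b.date));

  if (relevant.length === 0) return "";

  const lines = ["### Offline development notes", ""];
  for (const note of relevant) {
    // Show the short (7 character) form of each commit hash.
    lines.push(`- \`${note.commit.slice(0, 7)}\` (${note.date}): ${note.text}`);
  }
  return lines.join("\n");
}
```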

If I were developing git-based tools, enhancing the offline experience would be something I would spend some time on. Working async is becoming the norm for remote teams. It's great to work together, and essential at times, but the ability to drop off and work offline is very important for keeping a healthy work/life balance. It also means that when you do get together with the team, that time is even more beneficial, because you've been able to make a lot of progress offline without stepping on each other's toes.

-

Exploring iOS Creation Tools

ios & mac operating systems design

I recently had to re-install most of the main Apple iOS apps as the previous versions were all crashing on startup. While I was doing this, I took some time to look at the feature sets of these apps, most of which I never use. I was pleasantly surprised: there's actually quite a lot you can do with these default apps, and it looks like it could be very useful. A lot of the apps are quite minimalist, and have enticing design.

However, functionality is not obvious straight away. I find that most of these apps don't seem to follow typical conventions for where features are or how they are implemented. Each one appears to do things in its own unique way. For the first 20-30 mins of playing with an app, I was constantly tapping the wrong place, opening the wrong menu items, getting stuck and having to close the app and re-open it just to get back to a place I recognised. It's way too easy to delete things in iOS apps, and there's no undo. I've lost, or nearly lost, loads and loads of stuff by accidentally deleting something when the touch UI started misinterpreting my gestures, or when I accidentally made an unintended gesture. So it's not obvious, and learning is very frustrating.

Having said that it looks like the following things might be possible with standard Apple apps:

- Publishing ebooks (Pages)

- Recording and editing audio (GarageBand, Voice Memos)

- Recording and editing video (iMovie)

- Some basic automation (Shortcuts)

Being able to do all these things from a mobile device would be awesome.

The design of these default apps has a very "Apple" look and feel to it, which is great. However, I'm a bit disappointed that documentation and marketing pages are very scattered. The default selection of apps is actually quite good, but I don't get the impression that Apple is taking them very seriously. Each one should have a canonical page on the website and there should be downloadable documentation. The whole offering feels more like a shabby patchwork than a suite for creators. It's like they did all the hard work of building the restaurant and then gave up right before creating the menus.

Anyway, in my experiments with GarageBand, though making music is probably a bit optimistic for me currently, recording an audio podcast might be possible. I’d like to be able to record audio segments and drop them into some form of template, and render out an episode, complete with intro and segment audio jingles.

I’m guessing the whole template thing probably isn’t possible, but having a rudimentary way to put together a podcast from some audio clips might be.

Speaking of which, wouldn’t it be awesome if you could add annotations in the Podcasts app while you were listening to a podcast, and a way to easily crop out short clips, so that you could insert them into a podcast you were creating?

I like the idea of being able to have an async conversation via the medium of podcasts, for fun but also could be very useful in a work setting too. Anyhow just wanted to mention briefly my recent experiences with iOS apps, frustrating, but I can see potential possibilities.

-

Nginx and the Ukraine-Russia war

programming open source

With the war in Ukraine unfolding, I started wondering about tools and libraries that might be affected by the crisis. What happens to open source projects that are caught in the crossfire of war?

The first such item that sprung to mind was Nginx. It’s used by an enormous amount of the modern web as a reverse proxy and load balancer.

Nginx is one of the biggest open source successes in recent memory, and as far as I know it's developed by Russia-based developers. Looking at their website, it appears they self-host all development rather than use a git SaaS platform like Github.

Nginx has already been in the news in the past couple of years for similar issues. Thankfully the code is open source, but it's clear that developing safe and reliable software for a worldwide ecosystem is not very straightforward.

With the US and many other countries imposing sanctions on Russia, how will that affect the open source communities online?

I don’t have an answer to that question, but it’s something I’m looking out for.

My best wishes to both Ukrainian and Russian developers, I hope you aren’t caught up in the madness of war.

-

The Mirror (A poem of cruelty)

blogging writing poetry

They: Be the mirror!

I: I won’t be the mirror!

They: we hate that you won’t do what we say, so we hate you!

[some time passes]

[everybody is a bit sad]

They: what do we do?

I: I don’t know

…

[some time passes]

…

They: Be the mirror!

I: I’m the mirror!

They: we hate the mirror, so we hate you!

[some time passes]

[everybody is a bit sad]

They: what do we do?

I: I don’t know

…

[some more time passes]

…

I: I guess I’ll go then, again. Maybe some others have food that I can eat and water that I can drink, so that I don’t die

###

Update: This poem will be the last that I ever write, so as to try to avoid even more abuse than is currently being inflicted upon me. Cruelty should not be encouraged. Cruelty in the world is very real; I am absolutely certain of that now.

Update: Lots of very unusual internet connectivity issues today

-

Next generation tools and workflows for the creator economy

blogging writing web development programming javascript

There was an interesting Smashing Magazine piece earlier in the week, Thoughts On Markdown. It does a really good review of the transformative effect Markdown has had in tech, especially for developers, but also for creators.

Markdown unlocked a whole ecosystem of workflows, generally centered around git version control platforms such as Github. That's because they now all offer CI/CD tools, i.e. ways to run shell programs that can do things to and with the files in your repository when you perform actions like push/commit/merge.

I wrote about this trend a little over a year ago in my piece about Github Actions for custom content workflows. I later wrote about Mozilla MDN Docs are going full Jamstack, which was a high profile example of the trend. I was seeing in my own work how the combination of automation, git version control and a simple authoring format were transformative in what I was able to do. I think the fact that Mozilla went all in on such a workflow is a big sign of things to come.

Along with the praise about the impact, the Smashing piece's thesis is that although good things have come about because of Markdown, it isn't well suited for editors, writers and creators. They go into a lot of depth in their article; it's really worth the read.

I’m sympathetic to their point of view because although it’s fantastic for quickly writing documentation for coding projects, I have found it a bit tedious for writing, but especially editing essay style pieces.

I love how easy it is in Markdown to add URLs and lists, to bold or italicise text, and to add titles and sub headings. Guess what, you can also just add HTML directly in the file for those situations where the syntax falls short. All that is great, and it doesn't bother me that you end up with some slightly ugly syntax scattered through the text. I'm fine with that. In fact, for URLs you get used to it very quickly, and it's actually, in my opinion, arguably better to be able to see the full URL text, so you can easily spot mistakes before publishing, and it encourages you to favour well written URLs. It's often overlooked because it's somewhat subtle, but structured and nicely formatted URLs make browsing and sharing on the web a much nicer experience.

The biggest annoyance for me is in the editing, because the way corrections are displayed in a Github PR are sort of close to unreadable. Each paragraph is one line in a markdown file, and if you change 1 word, the entire paragraph is highlighted in a way that you lose the flow of the whole document. I’m constantly having to preview, commit, review. It’s that feeling where you can’t see the woods for the trees, and it makes writing prose much more difficult than it should be.
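One commonly suggested workaround (sometimes called "semantic line breaks") is to put each sentence on its own line. Markdown renderers join consecutive lines back into a single paragraph, but a line-based diff then only highlights the sentence that changed. Here's a rough sketch of a reflow helper. The sentence splitting is deliberately naive: it will trip over abbreviations like "e.g." and it's meant for prose paragraphs only, not lists or code blocks.

```javascript
/**
 * Naively reflow Markdown prose so each sentence sits on its own line.
 * A sentence boundary is taken to be ., ! or ? followed by whitespace.
 * Blank-line paragraph breaks are preserved.
 */
function oneSentencePerLine(markdown) {
  return markdown
    .split(/\n\s*\n/) // split into paragraphs
    .map((para) =>
      para
        .replace(/\s*\n\s*/g, " ") // unwrap any existing line breaks
        .trim()
        .split(/(?<=[.!?])\s+/) // break after sentence-ending punctuation
        .join("\n")
    )
    .join("\n\n");
}

console.log(oneSentencePerLine("One sentence. Another one! A third?\n\nNew para."));
// One sentence.
// Another one!
// A third?
//
// New para.
```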

The topic came up in a recent Shop Talk Show. Dave is keen on exploring Markdown editors when he's old and Chris is bullish on block editors from his mostly very positive experience with WordPress's newish Gutenberg editor. He's always going on about it (in a good way), so there must be something to it.

And that's where the Smashing piece ends up, talking about the concept of block editors, which I am totally unfamiliar with. It sounds interesting, though I wonder how much of the automation and collaboration features of a Github+Markdown workflow you would lose by moving to block editors. Also, and this is a big one: portability. I personally am willing to put up with some of the annoyances of Markdown because I know it works everywhere that supports git repos. Where is the portability in block editors?

There are signs that some of the benefits of modern webdev blocks and components are making their way into Markdown, for example with MDX, a Markdown variant that makes it possible to embed React and Vue components directly in your Markdown files. So maybe we will get both Markdown and block editing in the new embeddable web that the folks at Smashing envision.

It’s a topic that I’m watching closely because it will have a big effect on what I like doing. I’ll finish with a Markdown Pro/Cons list as I currently see it:

Markdown Pros:

- Portability

- Collaboration

- Git friendly

- Compatibility

- Huge ecosystem of tools

- Being able to stick it in a PR, then stick it into a workflow

Markdown Cons:

- Not the best writing experience

- Lousy editing experience

-

Changes to the blog and newsletter

blogging web development linkblog programming javascript newsletter

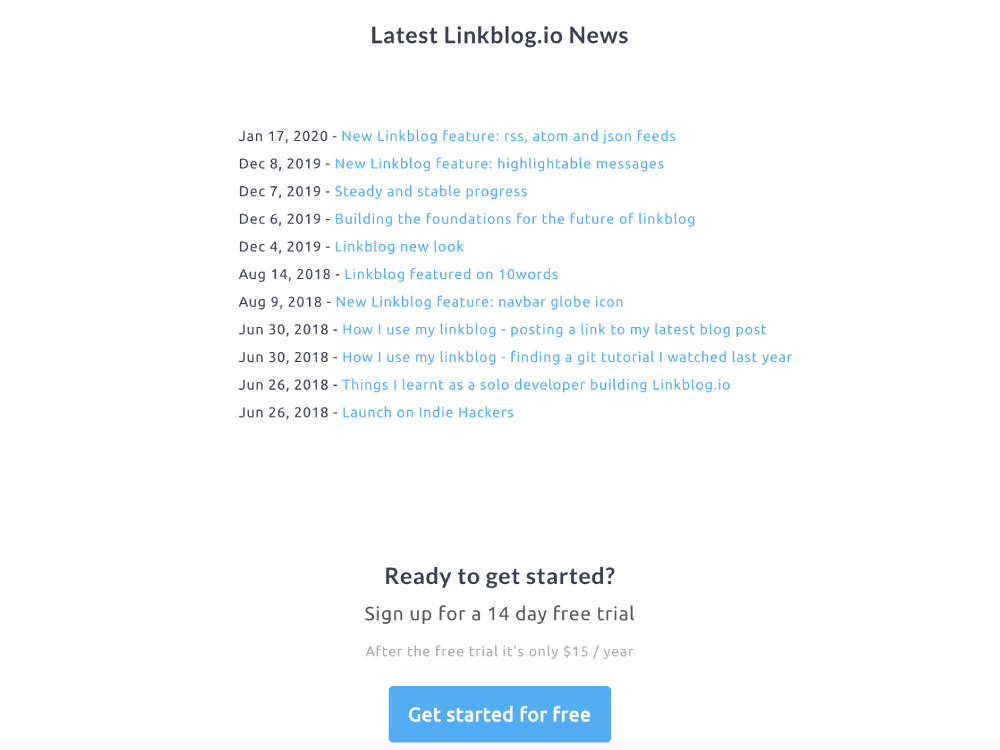

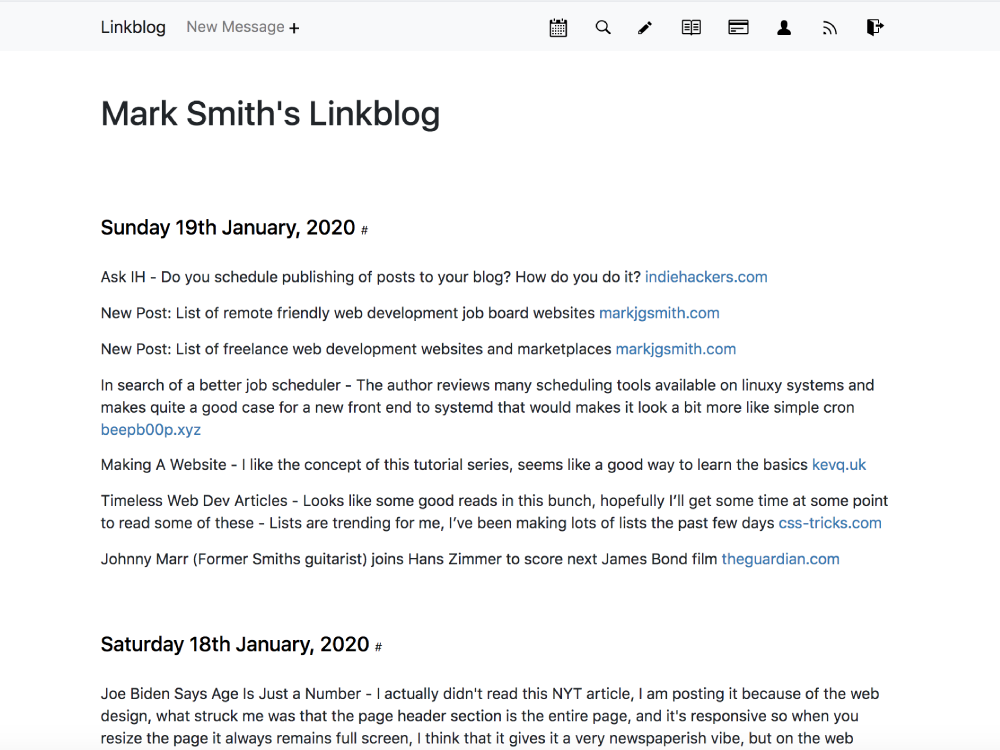

Towards the end of last year, I stopped posting to the linkblog. It wasn’t by choice, I still enjoy reading and curating content, but life configured itself for me in such a way that it was basically impossible to do in any meaningful way. I had just reached the 10 year anniversary. I’m glad I made it that far, but the world said no, loud and clear.

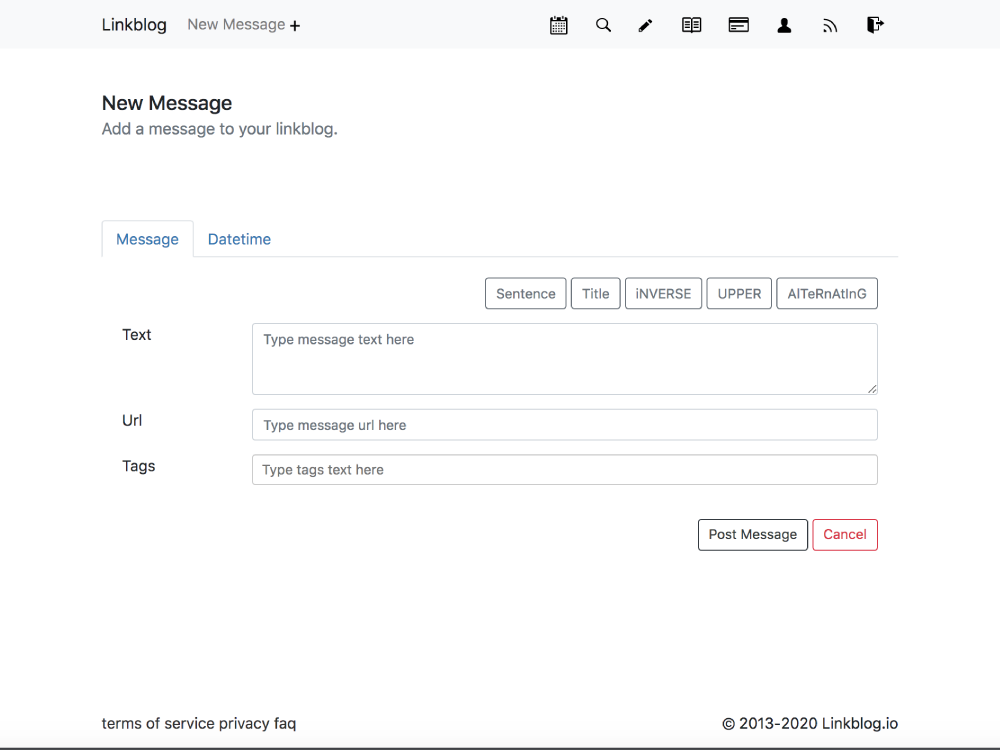

I have no way to post privately using the linkblog tool. I never had the chance to build that feature. It was on the roadmap, but there were always more important features that popped up, and I had made the decision to implement that feature later to try to make things a bit easier. There was already so much to worry about, and it seemed to me that storing less private data would eliminate a whole class of security concerns for my little SaaS web application.

With no links, I didn't have any material for the newsletter, so that had to be put on hold. I eventually did send out a short new year's edition. It was fun putting it together, and to be honest I quite liked the brevity. I put out another, and another. I also started adding a short, unique one line title to each edition. The idea was to have something memorable, a sort of virtual hook to hang it on, so it doesn't just fade into the obscurity of time. I'm liking the new format.

I also have a bit of a goal of writing more blog posts, but I want it to be fun, and not get too stuck in crafting the perfect post each time. I might check out some writing tools I keep hearing and reading about, like Grammarly and Hemingway, at some point. Until then though I'm just going to try writing and editing quickly. So expect the posts to be a bit rough around the edges, with spelling mistakes and annoying repeated bits. Sorry. Those bits annoy me too, but I think quantity and general narrative are more important than minutiae at the minute. Hopefully my writing will get better over time. It's a somewhat different way of thinking to writing code, and my brain just doesn't do it all that well yet.

I'm still liking the idea of publishing on Saturday, but I'm going to be more flexible. I might sometimes do a week day issue, and I'm not committing to every Saturday; life is too darn complicated at the minute. I'd love to have a consistent schedule, everyone says that's the best way to increase readership, but it's just not realistic for me right now. It's the "if the world allows" attitude: I'm going to try, but I'm not going to kill myself over it.

Ok that’s it, nothing Earth shattering but I’m feeling good about the changes. I hope you like the sound of them.

Update - Wow I just noticed how many 2s there are in today's date. Hello world! 🤷‍♂️

TODO add links when world allows

-

Component and configuration based UIs

blogging web development linkblog programming javascript

The past few days, following on from my investigations into the rendering process of modern javascript frameworks, I’ve had component and configuration based UIs on my mind. I’ve been wondering what the big deal is, why all the fuss, when it seems to just boil down to co-locating your templates and code together.

I kept thinking there must be more to it. But then I thought: what if that really is all there is to it? There are probably other reasons, but if it is just about co-locating the templates and the code, would that be so bad?

One major annoyance with templating is making sure all the functions you are using in your templates are in the render context at render time. If any of them aren’t there, the rendering is going to bomb because the template library will do exactly as you have written in your template logic, except some of the functions you told the library to call just aren’t there, and when your template reaches one of those, it results in a runtime error. If you don’t use many functions in your templates, this is likely a non-issue. On the other hand if you do, then it is a pain you face.

The way it’s done with regular template rendering, is that you have to look down your entire template hierarchy, note down which functions get used, and pass these into the top level template, as part of the data object, and you have to make sure that each time you include a template/partial, to pass the right functions into the render method invocation for that template/partial. So again, if you don’t use many nested templates, it’s probably a non-issue. But if you do, then welcome to code bombsville.
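Here's a toy illustration of the problem. The `render` function below is a hypothetical stand-in for a real template library: it replaces `{{fnName key}}` placeholders by calling a function from the render context. If the data passed in doesn't carry every function the template uses, nothing complains until rendering actually reaches that placeholder.

```javascript
/**
 * Toy renderer: replaces {{fnName key}} with context[fnName](context[key]).
 * Throws if the function is missing from the render context, which is the
 * runtime failure described above.
 */
function render(template, context) {
  return template.replace(/\{\{\s*(\w+)\s+(\w+)\s*\}\}/g, (match, fnName, key) => {
    const fn = context[fnName];
    if (typeof fn !== "function") {
      throw new TypeError(`Template calls "${fnName}" but it is not in the render context`);
    }
    return String(fn(context[key]));
  });
}

const pageTemplate = "<h1>{{upper title}}</h1><p>{{formatDate published}}</p>";
const data = { title: "hello", published: new Date(Date.UTC(2022, 1, 22)) };

// Every function used anywhere in the template has to be passed in by hand.
const helpers = {
  upper: (s) => s.toUpperCase(),
  formatDate: (d) => d.toISOString().slice(0, 10),
};

console.log(render(pageTemplate, { ...data, ...helpers }));
// <h1>HELLO</h1><p>2022-02-22</p>

// Forgetting a helper only shows up at render time:
// render(pageTemplate, { ...data, upper: helpers.upper }) throws a TypeError.
```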

For people who write highly nested, function-heavy templates, co-locating code would perhaps be beneficial, just to make preparation of render contexts less error prone.

With all that in mind, I decided to just try adding component rendering to my ssg in a feature branch. Worst case is that it doesn’t work and I could just delete the feature branch.

I thought about it for a few hours, looking at my code, looking at javascript’s implementation of classes, which bundle functions, over and over again. It’s still very new code, so I wasn’t totally sure about it, but I think I could see a way to modify the ssg that would result in component rendering.

It was simple enough, just add a renderComponent method to the renderer, and that method would grab all the functions from the passed component, merge them into the data object, and pass that into the regular render method. Could it be that simple?

```javascript
renderComponent(component, data) {
  // Add component functions to render context.
  // (The `data` argument isn't used here; component.getData() supplies the data.)
  const templateBody = component.getTemplate();
  const templateData = Object.assign({}, component.getData(), this.getComponentFns(component));
  return this.render(templateBody, templateData);
}

getComponentFns(component) {
  // Copy each own property of the component into a plain object.
  // Methods declared in a class body live on the prototype, so they are
  // only picked up here if they are assigned on the instance itself.
  return Object.getOwnPropertyNames(component)
    .reduce(function(acc, current) {
      acc[current] = component[current];
      return acc;
    }, {});
}
```

In the javascript community such implementations are often referred to as syntactic sugar. It might be the same in other language communities, I don't know (update - turns out it is the same in other programming languages). Essentially the underlying process remains the same, but a new structure is available which is useful in many situations. Examples of this approach are Classes, syntactic sugar on top of prototype-based inheritance, and Async/Await, which still uses traditional callbacks underneath, but via promises.
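A quick way to see the "syntactic sugar" point for classes: the two definitions below produce objects that behave roughly the same way (there are small differences, e.g. a class can't be called without `new`), and in both cases the method lives on the prototype rather than on the instance. Depending on how a component defines its functions, that detail matters for code like `getComponentFns` above, since `Object.getOwnPropertyNames(instance)` only lists the instance's own properties. The `Greeter` names are just for illustration.

```javascript
// Class syntax...
class GreeterClass {
  constructor(name) {
    this.name = name;
  }
  greet() {
    return `Hello, ${this.name}`;
  }
}

// ...and roughly the same thing with a constructor function and a prototype.
function GreeterProto(name) {
  this.name = name;
}
GreeterProto.prototype.greet = function () {
  return `Hello, ${this.name}`;
};

const a = new GreeterClass("class");
const b = new GreeterProto("prototype");

console.log(typeof GreeterClass); // function (a class is a constructor function)
console.log(a.greet(), "|", b.greet()); // Hello, class | Hello, prototype

// In both cases greet lives on the prototype, not on the instance:
console.log(Object.getOwnPropertyNames(a)); // [ 'name' ]
console.log(Object.getOwnPropertyNames(GreeterClass.prototype)); // [ 'constructor', 'greet' ]
```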

It’s pretty clear to me that if you can write your template alongside the functions it uses, and be sure that at render time, all the functions will be available, then that’s a net positive. And it might very well be that there are other, more frontend centric reasons to use components.

Anyway, guess what, it worked!

It seems sort of obvious now, but sometimes when you are in the fog of technology, mental models, implementations, buzzwords, build tools, people's opinions, and just the complexities, mutilations, humiliations, thirsts, hungers, dirtiness, impossible situations, contradictions, blocking, pain, starvation, cruelty and madness of life, it totally doesn't seem obvious!

In the end I added about 10 lines of code and a very simple Component class, and it worked: the component I included in my template rendered! And since my ssg has a way to define custom renderers, most libraries that support partials/includes should now be able to render components. It's early days and I haven't done much testing, but it seems as though it is possible.

It was quite a moment, when I ran the code, I sort of knew it would work, but it was still a pretty big wow moment. I was like, “wow it worked”. That doesn’t happen very often in programming, at least not at that scale.

Regular template rendering still works as before, and for templates that don’t use lots of functions, that’s probably going to be the better, simpler way to go. But if you do write templates that use lots of functions, using components is a neat way to bundle them with your template, so that at render time the functions are right there in the context, no need to do any special preparation.
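As a rough sketch of what a very simple component class along these lines could look like (hypothetical, not the ssg's actual code): subclasses provide their template, data and helper methods, and a `collectFns` helper walks the prototype chain so methods defined in a class body are picked up too. Each function is bound to the instance so `this` still works when the template calls it.

```javascript
/** Hypothetical base class: subclasses provide a template, data and helper methods. */
class Component {
  getTemplate() {
    return "";
  }
  getData() {
    return {};
  }
}

// Base-class plumbing that should not end up in the render context.
const RESERVED = new Set(["constructor", "getTemplate", "getData"]);

/** Collect helper functions from the instance and its prototypes, bound to the instance. */
function collectFns(component) {
  const fns = {};
  let proto = component;
  while (proto && proto !== Object.prototype) {
    for (const name of Object.getOwnPropertyNames(proto)) {
      const value = proto[name];
      // Walking from subclass upwards, so the most-derived override wins.
      if (typeof value === "function" && !RESERVED.has(name) && !(name in fns)) {
        fns[name] = value.bind(component);
      }
    }
    proto = Object.getPrototypeOf(proto);
  }
  return fns;
}

class PostHeader extends Component {
  constructor(post) {
    super();
    this.post = post;
  }
  getTemplate() {
    return "<h2><%= shout(title) %></h2>"; // e.g. an EJS-style template string
  }
  getData() {
    return { title: this.post.title };
  }
  shout(text) {
    return `${text.toUpperCase()}${this.post.draft ? " (draft)" : ""}`;
  }
}

const header = new PostHeader({ title: "hello", draft: true });
const context = { ...header.getData(), ...collectFns(header) };
console.log(Object.keys(context)); // [ 'title', 'shout' ]
console.log(context.shout(context.title)); // HELLO (draft)
```

The resulting `context` is what would be handed to the regular render method along with `header.getTemplate()`.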

The only current limitation is that your top level can’t be a component, it has to be a template. The ssg renders components inside templates. There might be a way to have top level components in the future, I haven’t figured out an easy way to do that yet.

One other thing, not related, but related: if there is one thing I have learnt here in Vietnam, it’s that the people are cruel, and their cruelty will only increase over time. This is my opinion, formed after living here for several years. They aren’t all cruel, but when all is said and done the net cruelty only increases. I really feel I have to say that now, while I still am able.

They should consider renaming it the People’s Cruelty of Vietnam. It would be a much more accurate description of the place.

-

What’s up with templating in modern javascript frameworks?

blogging web development linkblog programming javascript

I’ve been writing a static site generator recently. It’s a rewrite of a utility that I use to build my statically generated linkblog. That utility has been working well, but its implementation isn’t very elegant. It was, after all, my first attempt at building the linkblog statically, which had previously been a typical MongoDB backed Express app.

I’ve been really delighted using many of the latest javascript features. Specifically Classes, Array functions (especially map and reduce) and Async/Await. That combination IMO has dramatically improved the development experience. The code is massively easier to reason about, and just looks so much better.

```javascript
class Dispatcher {
  constructor(app, cfg, renderers, collections, srcDir, outputDir) {
    this.app = app;
    this.cfg = cfg;
    this.renderers = renderers;
    this.collections = collections;
    this.srcDir = srcDir;
    this.outputDir = outputDir;
    this.renderersByName = undefined;
    this.renderersByExt = undefined;
    this.outputPaths = [];
  }

  async init(templatePaths) {
    debug(`Initialising dispatcher with templatePaths: [${templatePaths}]`);

    // Arrange things for easy selection
    this.renderersByName = this.getRenderersByName();
    this.renderersByExt = this.getRenderersByExt();

    for (let i = 0; i < templatePaths.length; i++) {
      // ... do some rendering ...
    }
  }

  getRenderersByName() {
    // Build a lookup object keyed by renderer name
    const renderersByNameReducer = function(acc, current) {
      acc[current.name] = current;
      return acc;
    };
    return this.renderers.reduce(renderersByNameReducer, {});
  }

  // ...
}
```

The latest ssg tool now has all the basic features I need to rebuild my linkblog. It uses what I call “old school templates” to render all the pages. In my case it’s EJS, but it could work equally well with any of the many other template libraries like Handlebars, Liquid, Mustache etc. I wanted to get that working before venturing into rendering pages using some of the more “modern” component based javascript frameworks like React, Vue and Svelte.
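The excerpt calls `getRenderersByExt()` without showing it. A plausible sketch, assuming each renderer object has a `name` and a hypothetical `extensions` array (neither shape is confirmed by the post), builds both lookup tables with `reduce` and then picks a renderer from a template path’s extension using Node’s `path.extname`.

```javascript
const path = require("path");

// Hypothetical renderer definitions: a name, the file extensions it handles, and a render fn
const renderers = [
  { name: "ejs", extensions: [".ejs"], render: (body) => `ejs:${body}` },
  { name: "markdown", extensions: [".md", ".markdown"], render: (body) => `md:${body}` },
];

// name -> renderer, same reducer pattern as getRenderersByName()
function getRenderersByName(renderers) {
  return renderers.reduce((acc, current) => {
    acc[current.name] = current;
    return acc;
  }, {});
}

// extension -> renderer; one renderer can register several extensions
function getRenderersByExt(renderers) {
  return renderers.reduce((acc, current) => {
    for (const ext of current.extensions) {
      acc[ext] = current;
    }
    return acc;
  }, {});
}

// Pick the renderer for a template path, or undefined if no renderer handles it
function rendererForPath(renderersByExt, templatePath) {
  return renderersByExt[path.extname(templatePath).toLowerCase()];
}
```

Keying by extension keeps the dispatch loop itself simple: for each template path it does one lookup and hands the file to whichever renderer claims it.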

This past week, I’ve been looking at these frameworks more closely, trying to figure out how the rendering works. I’m trying to get a sense of what modifications I’ll need to make to my ssg so that it can generate component based websites. I’ve read so much about these frameworks over the years, it all sounds so wonderful, but also quite mysterious.

With old school templating rendered server-side, though there are lots of peripheral features, it essentially boils down to passing a template string, together with an object containing data, to the templating library. The library takes the data and the template string and gives you back an HTML string, a.k.a. the rendered page. You write that to a file. Rinse and repeat, upload all the files to your CDN / hosting provider, and that’s your site live.
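That loop (template plus data in, HTML string out, write it to a file) can be sketched in a few lines. This is an illustration under assumptions rather than the ssg’s actual code: `renderPage` is a hypothetical stand-in for a real template library’s render call, and pages are plain `{ outputPath, template, data }` objects.

```javascript
const fs = require("fs/promises");
const path = require("path");

// Hypothetical stand-in for a template library: replaces {{ key }} with data[key]
function renderPage(template, data) {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (match, key) => String(data[key] ?? ""));
}

// Render every page and write the resulting HTML under outputDir
async function buildSite(pages, outputDir) {
  const written = [];
  for (const page of pages) {
    const html = renderPage(page.template, page.data);
    const outFile = path.join(outputDir, page.outputPath);
    await fs.mkdir(path.dirname(outFile), { recursive: true });
    await fs.writeFile(outFile, html, "utf8");
    written.push(outFile);
  }
  return written;
}
```

Deploying is then just a matter of uploading the contents of `outputDir`.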

```javascript
debug('Renderer fn - ejs: rendering template');
const renderedContent = ejs.render(
  templateBody,
  context,
  options
);
return renderedContent;
```

The template libraries have lots of neat features to make it easier to create your pages. One such feature is the ability to include templates inside templates: you create templates for small pieces that you can reuse across all your pages. On my Jekyll based blog, for example, I have, among others, includes for the Google Analytics snippet, as well as messages promoting my development services that appear on each page. I can update the included templates and the messages update across all the pages that use them. The feature is sometimes called partials, and it’s been a standard feature of SSGs for many years. Each library has a syntax for describing the include that you insert directly into the template HTML, and you often also pass the include a data object, which the library uses to render the included template.

```html
<h1>Hello World!</h1>
<h2>Some included content:</h2>
<%- render(includes.helloIncludes, locals) %>
<h2>Some regular content:</h2>
<p><%= locals.loremipsum.content1 %></p>
```

It’s worth noting that in most implementations you can pass functions in the data object, and use those functions directly in your templates. The libraries execute these functions as part of the rendering of the page.
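Both the include pattern and the “functions in the data object” point can be shown without any library at all. In this sketch (an assumption for illustration, not EJS itself) templates are plain JavaScript functions of `locals`, and the data object carries both a `render` function for includes, mirroring the `render(includes.helloIncludes, locals)` call above, and a hypothetical `formatDate` helper that the include calls while rendering.

```javascript
// Templates modelled as functions of the data object (a stand-in for EJS template strings)
const includes = {
  helloIncludes: (locals) =>
    `<p>Hello from an include, last updated ${locals.formatDate(locals.updated)}</p>`,
};

const page = (locals) =>
  [
    "<h1>Hello World!</h1>",
    "<h2>Some included content:</h2>",
    locals.render(locals.includes.helloIncludes, locals),
    "<h2>Some regular content:</h2>",
    `<p>${locals.loremipsum.content1}</p>`,
  ].join("\n");

// Rendering a template is just calling it with the data
function render(template, locals) {
  return template(locals);
}

// The data object carries plain values *and* functions the templates can call
const locals = {
  includes,
  render,
  formatDate: (date) => date.toISOString().slice(0, 10),
  updated: new Date(Date.UTC(2020, 4, 1)),
  loremipsum: { content1: "Lorem ipsum dolor sit amet." },
};

const html = render(page, locals);
```

Unlike EJS’s `<%= %>` tag, this sketch does no HTML escaping, so it is only suitable for trusted data.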

The templates contain the logic for creating the HTML files, while the javascript code you use to fetch and prepare template data lives in separate files. So what about the modern frameworks, what are they doing?

Well, there are a lot of buzzwords and fancy-sounding terminology, but after reviewing several component based projects, it seems to me they are doing essentially the same thing. The template code has been moved into the javascript files, inside a return statement; components are essentially the same thing as includes; and even props, one of the key concepts, are really just the same as the data object that you pass to includes. All this mystery, and really it’s just another template library. I’m probably missing some crucial detail, but after a few days of digging, that’s what I’m seeing.
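To make the comparison concrete, here is the shopping-list markup from the React example that follows, written as an include-style function that takes a data object. It is only an analogy: React components return elements rather than strings and can re-render in the browser, which this plain function doesn’t attempt. The `shoppingList` name is illustrative.

```javascript
// An "include" that receives its data object, much like a component receives props
function shoppingList(props) {
  const items = ["Instagram", "WhatsApp", "Oculus"];
  return [
    '<div class="shopping-list">',
    `  <h1>Shopping List for ${props.name}</h1>`,
    "  <ul>",
    ...items.map((item) => `    <li>${item}</li>`),
    "  </ul>",
    "</div>",
  ].join("\n");
}

// Roughly what <ShoppingList name="Mark" /> expresses, as a plain function call
const html = shoppingList({ name: "Mark" });
```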

```jsx
class ShoppingList extends React.Component {
  render() {
    return (
      <div className="shopping-list">
        <h1>Shopping List for {this.props.name}</h1>
        <ul>
          <li>Instagram</li>
          <li>WhatsApp</li>
          <li>Oculus</li>
        </ul>
      </div>
    );
  }
}

// Example usage: <ShoppingList name="Mark" />
```

There must be something else that’s useful over and above colocating related code. At the present moment I personally find that slopping everything together causes me more confusion, because it’s difficult to conceptualise where the boundaries of the app are located. It’s just this vast amorphous everything-code, instead of code that’s divided into rendering logic and data preparation logic.

I’m aware that I’m coming at this from a very server-side way of thinking about things, and probably component based websites make a lot more sense once they are running in a browser environment, with event handlers and the like, but nonetheless, I find these observations interesting, even if no one else does.

What’s the major advantage that you get from sticking the template into the javascript?

-

Static site generator development continues...

blogging web development linkblog programming

Here are the notes I mentioned in my previous post. They’ll give you some idea of what I’ve been up to in my personal development projects. It’s not a nicely crafted piece, but I’ve made some progress on my static site generator, and I wanted to blog about it. Still blogging… :)

- Initial version which I wrote as a sort of life raft when the linkblog.io ship was sinking

- Jamstack, serverless, Netlify, GitHub Actions, CI/CD, and git repo powered development were all becoming super popular

- It’s running my current linkblog, it works, it’s all running in the cloud, quite awesome

- But…the code is kind of fragile

- quite a lot of duct tape

- the structure of the code is convoluted in places

- lots of callback hell

- hey, async/await was new when I wrote it and I hadn’t gotten comfortable with it yet

- It uses plain javascript objects rather than classes, which is mostly fine, but I’ve seen quite a few implementations of tools that use classes

- it’s much clearer to me now how to think about and mentally manipulate such concepts

- there’s a lot of flexibility and structural benefits when you have the right abstractions

- you can more easily get out of the weeds in some places

- You can more easily build something that can be refactored to suit your current needs

- Classes do present a new set of challenges, but on balance, I feel like they are the right structure to use a lot of the time

- I wrote an initial version back before the big lockdown happened, I had been thinking about it for a while and was able to get something working over a few days

- The main ideas were

- Just render templates, stick to EJS and Markdown, keep it simple

- Path-based page routing

- Maybe rendering websites using templates could work in a similar way to a render farm, something I have a lot of experience with from my time in the feature film visual effects (VFX) industry

- I had been reading a lot about various more formal data structures, and it was clear to me that a queue would be beneficial to handle all the template rendering

- Just get something working that runs locally, but maybe if the architecture was done right it would be easy to get things working in a serverless environment later

- Initial specific requirements for phase 1 - I implemented these crucial examples very early, so I can easily check that structural changes don’t break them

- EJS using data files

- EJS with includes

- Markdown

- Phase 2 was implemented after the big lockdown; once again I’d been thinking about the best structure for many weeks and was able to make these changes over 3-4 days

- A set of core components have emerged that feel really nice, a structurally sound way to render templates, with possibility to extend functionality

- Things I have in the back of my mind

- How to handle config

- How to handle more advanced template rendering, for instance how to render many different output pages from a single template, with some kind of iteration logic, necessary for rendering the linkblog calendar folder structure

- Concentrate on EJS, but try to architect a solution where different ways of rendering templates could be accommodated in future, at least try to go in that vague direction even if it’s not a completely working multi-rendering solution at first

- Create the right abstractions so that changing template rendering input and output locations is trivial (e.g. local file system, S3-like cloud storage, etc.)

- Inspiration

- Jekyll - the ssg I currently use for my blog

- Eleventy - awesome ssg that uses classes in a really nice way, great community

- Pixar’s RenderMan - the standard for running large scale render farms in the VFX industry

- Render farms - memories I have of my time working with VFX render farms, mostly sysadmin/devops stuff, but also some software development

- Serverless and Jamstack, the shiny future at the end of the tunnel

- WordPress & open source

- Open source

- Something I’ve wanted to do for many many years

- There’s been a lot of Linux in all my previous jobs, I feel like I’ve been living and breathing in open source in one way or another all throughout my career

- But I must mention that the closer I get to having something I feel is good enough to ship, the more the world around me leaves me feeling hamstrung, with my hands tied behind my back, which is not so great considering the whole point of open source

- Which license to choose, MIT, GPL or Apache, or something else

- How to handle the competing, often contradictory aspects of the intersection between developing software in the open, and having a balanced personal life

- Somewhat esoteric things that I feel I should mention, without dwelling on them for too long at this point in time

- Why does it feel like the choice of open source license somehow impacts the freedoms I experience in my personal life?

- Why does it always feel like right after I do one of these 3-4 day development sprints on a personal project, I get absolutely clobbered by the world around me? It’s like clockwork, it happens every single time. I’m not particularly religious, but is this the metaverse of the future, appearing in the present? Seriously, it really worries me, especially that it’s impossible to talk about without sounding like you’ve lost your marbles

- The try to stay alive cascade

- survival

- self-preservation

- try not to negatively affect others
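Several of the technical notes in the list above (a render-farm-style queue, path-based routing, rendering many outputs from one template for the calendar folders, and swappable output locations) fit together in a small sketch. All names here (`routeFor`, `expandCalendar`, `MemoryWriter`, `RenderQueue`) are hypothetical, the routing rule is an assumption, and a real version would add concurrency, retries and error handling, much as a render farm does.

```javascript
// Path-based routing (assumed rule): src/about.ejs -> about/index.html, src/index.ejs -> index.html
function routeFor(srcPath) {
  const withoutSrc = srcPath.replace(/^src\//, "").replace(/\.[^/.]+$/, "");
  return withoutSrc === "index" || withoutSrc.endsWith("/index")
    ? `${withoutSrc}.html`
    : `${withoutSrc}/index.html`;
}

// One template, many outputs: one job per day, e.g. 2020/05/01/index.html
function expandCalendar(template, dates) {
  return dates.map((date) => {
    const [y, m, d] = date.toISOString().slice(0, 10).split("-");
    return { template, outputPath: `${y}/${m}/${d}/index.html`, data: { date: `${y}-${m}-${d}` } };
  });
}

// Output abstraction: anything with write(path, contents) could target disk, S3, etc.
class MemoryWriter {
  constructor() {
    this.files = new Map();
  }
  async write(outputPath, contents) {
    this.files.set(outputPath, contents);
  }
}

// FIFO queue of render jobs, processed one at a time
class RenderQueue {
  constructor(render, writer) {
    this.render = render;
    this.writer = writer;
    this.jobs = [];
  }
  add(job) {
    this.jobs.push(job);
  }
  async run() {
    let done = 0;
    while (this.jobs.length > 0) {
      const job = this.jobs.shift();
      await this.writer.write(job.outputPath, this.render(job.template, job.data));
      done++;
    }
    return done;
  }
}

// Example wiring with a toy render function (stand-in for EJS)
async function example() {
  const render = (template, data) => template.replace("{{ date }}", data.date ?? "");
  const writer = new MemoryWriter();
  const queue = new RenderQueue(render, writer);
  queue.add({ template: "<h1>About</h1>", outputPath: routeFor("src/about.ejs"), data: {} });
  const days = [new Date(Date.UTC(2020, 4, 1)), new Date(Date.UTC(2020, 4, 2))];
  for (const job of expandCalendar("<h1>Links for {{ date }}</h1>", days)) {
    queue.add(job);
  }
  const count = await queue.run();
  return { count, files: writer.files };
}
```

Because the queue only knows about jobs and a writer, swapping `MemoryWriter` for a file-system or cloud-storage writer, or running several queue workers in parallel, would not change how jobs are produced.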

-

Hi, it’s me. I’m still alive.

blogging web development linkblog programming

I just wanted to say hi and wish a happy new year to all.

The world is pretty complicated for me at the minute, I ended up in some sort of metaphorical/metaphysical (but in a lot of ways, very real) alive/dead loop in my life, so I just stopped posting because I sensed that it was going to continue for a while, and the thought of what that would look like from the outside had me thinking “No thank you very much”.